Chronic disease testing and the future of personalized medicine

Watch this on-demand webinar to explore a complete workflow for the development of targeted biomarker measurements for precision medicine

2 Sept 2021

Personalized medicine for improved human health outcomes will depend on the availability of better clinical research tests. These tests will incorporate data from multiple protein biomarkers and combine it with existing clinical research data to provide subject-specific individual ‘scores’ and inform clinical decision making.
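To illustrate the general idea of combining several biomarker measurements into one subject-specific score, here is a minimal Python sketch that uses a logistic combination of standardized protein levels. The protein names, weights, and intercept are hypothetical placeholders, and a logistic model is only one common choice; it is not the scoring method presented in the webinar.

```python
import math

# Hypothetical weights for standardized protein measurements (illustrative only,
# not values from the webinar).
WEIGHTS = {"protein_1": 0.8, "protein_2": -0.4, "protein_3": 1.2}
INTERCEPT = -1.0  # hypothetical baseline term


def composite_score(measurements: dict[str, float]) -> float:
    """Combine standardized biomarker levels into a 0-1 score with a logistic function."""
    linear = INTERCEPT + sum(WEIGHTS[name] * measurements[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-linear))


# One hypothetical subject's standardized measurements.
subject = {"protein_1": 1.5, "protein_2": 0.2, "protein_3": 0.9}
print(f"Subject score: {composite_score(subject):.2f}")
```

In practice, weights like these would typically be fitted and then validated on clinical research cohorts before any score is used to inform decisions.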

In this SelectScience® webinar, now available on demand, Prof. Stephen Pennington, professor of Proteomics at University College Dublin and founder of its spin-off company Atturos, is joined by senior scientists Dr. Orla Coleman and Dr. James Waddington. Together, these three experts outline the significant clinical need for better chronic disease testing and explain how this need can be met through an integrated workflow that enables simplified, robust, reproducible, and cost-effective analysis of readily collected human samples. Using supporting illustrative data, they also demonstrate how it may be possible to use clinical research measurements for precision medicine.

Register now to watch the webinar at a time that suits you, or read on to find highlights from the live Q&A session.

Q: What was your experience of adapting automation into your workflow?

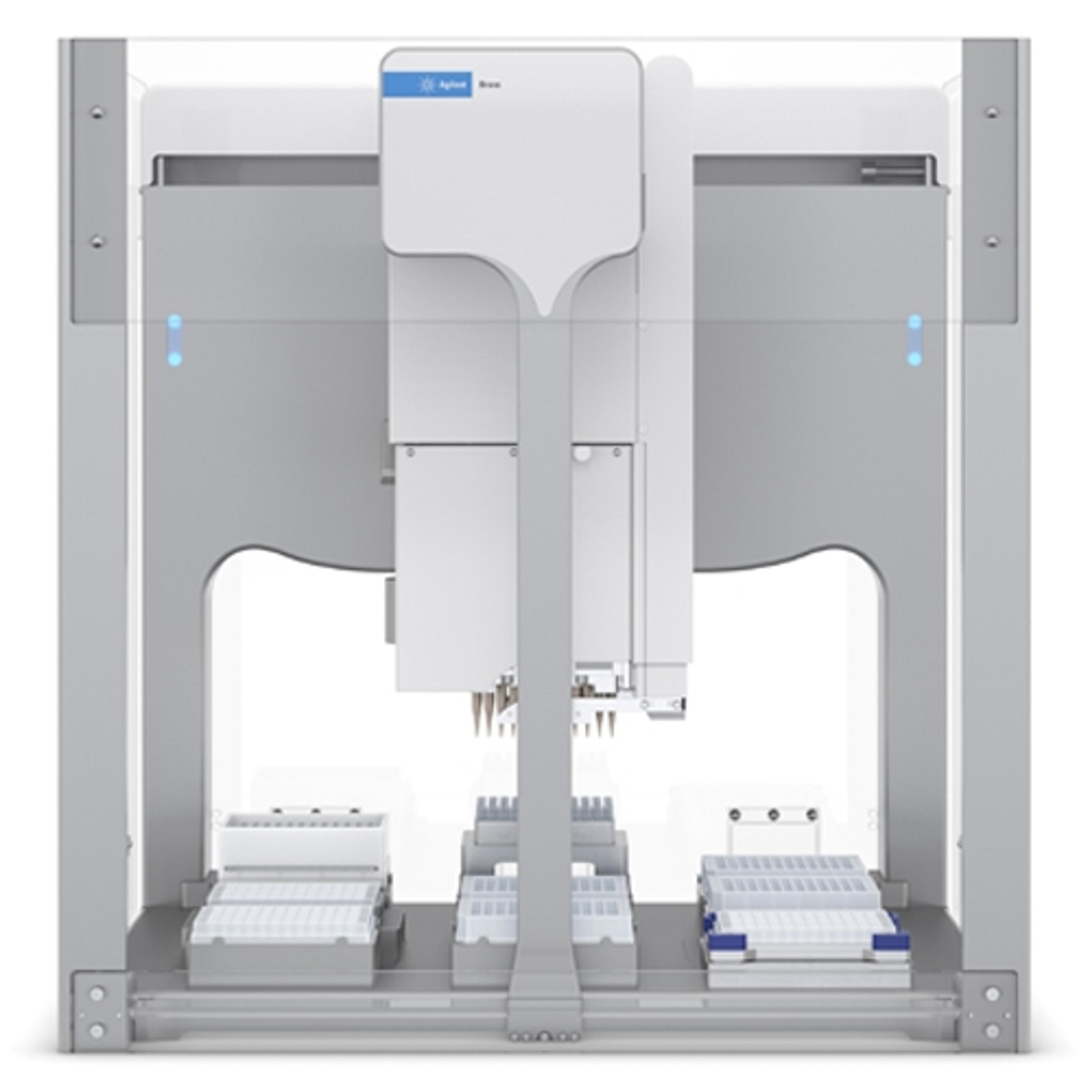

OC: Luckily, we had the Bravo installed last January, right before the pandemic hit, so some of the Agilent automation specialists were able to come to our labs. There's no doubt that helped us in getting the Bravo up and running. But what has really made it so easy for us to implement the automation is the interfaces that come with the Bravo. There are two main interfaces, the easiest of which is called the Protein Sample Workbench. It contains a number of preloaded utility and workflow applications, covering everything from affinity purification for phosphopeptide enrichment to fractionation and protein digestion protocols, all predefined in the application. We were able to use the workbench interface quickly and easily to adapt our SOP for manual digestion into the Bravo digestion application. But there is a lot more you can do with the Bravo. For example, in the VWorks interface, you can set up and design the layout of all the plates and other labware you're using. So, it has been very easy for us to introduce Bravo automation into our workflow.

SP: I’ve also seen that with the Bravo, the various individuals in the lab who may use the instrument are able to do so very easily. With other liquid handling workstations, the software is very often extremely complicated, takes a long time to learn, and is prone to bugs in the processes that are developed. That's definitely not the case with the Bravo. Individuals seem to be really comfortable walking up to the instrument and using it on a day-to-day basis.

Q: Have you tried to reduce the sample prep time by doing fast trypsin digestion and/or omitting the reduction and alkylation steps?

SP: At the moment, the digestion time is 16 hours. That's been fixed, and we stick to it very closely. We know that, for individual proteins, peptides are released by digestion over different time courses depending on the protein and on the peptide. So, if you change the digestion time, you'll change the relative levels of those peptides, which in itself isn't an issue as long as you stick to a particular time. We're often asked whether we can reduce the overall workflow time, and how long it takes from a sample arriving in the lab to the data and the report being generated. People obviously think it's important to make that as fast as possible. Our argument has been that we want to perform the workflow as reproducibly and as consistently as possible. Sometimes, making things faster doesn't necessarily make them better. It's also worth noting that we deliver the data report to the clinician within a turnaround time of a couple of days, which aligns well with the clinical management workflow.

Everything that we've done to date is based on 16 hours, and if we were to change that at all, then everything would change. We'd have to redo the validation studies of the assays that we're developing to show that they are consistent. It's therefore really important in this routine mode not to introduce any changes to the workflow. If you're going to introduce changes, their impact should first be established in a background research mode. We don't think that reducing the digestion time would offer a significant advantage for the assays that we're developing.
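The point about digestion time can be illustrated with a simple model. The sketch below assumes, purely for illustration, that each peptide is released with first-order kinetics at its own rate constant; the peptide names and rate constants are hypothetical, not measured values. Because the two peptides approach complete release at different speeds, their ratio depends on digestion time, but at any fixed time, such as 16 hours, it is the same from run to run.

```python
import math

# Hypothetical first-order release rate constants (per hour) for two peptides.
# These are illustrative assumptions, not data from the webinar.
RATE_CONSTANTS = {"peptide_A": 0.5, "peptide_B": 0.05}


def fraction_released(k: float, hours: float) -> float:
    """Fraction of a peptide released after `hours`, assuming first-order kinetics."""
    return 1.0 - math.exp(-k * hours)


def relative_level(hours: float) -> float:
    """Ratio of peptide_B to peptide_A released at a given digestion time."""
    a = fraction_released(RATE_CONSTANTS["peptide_A"], hours)
    b = fraction_released(RATE_CONSTANTS["peptide_B"], hours)
    return b / a


for t in (4, 8, 16):
    print(f"{t:>2} h digestion: B/A ratio = {relative_level(t):.3f}")
```

With these assumed constants, the B/A ratio rises as digestion lengthens, which is why holding the digestion time fixed matters for consistent relative peptide levels.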

Q: For routine use, are you going to convert the MRM assays to immunoassays?

SP: There is a great temptation to convert from an MRM assay to an ELISA, because ELISAs can be run easily in many laboratory environments and don't require sophisticated specialist equipment. The problem is that, if you're developing new biomarkers, there may or may not be ELISAs available for the proteins you're interested in. If there aren't, identifying suitable antibodies and developing an ELISA can take a long time, even for a single protein. This becomes even more complicated if there are multiple proteins that you need to measure.

The panel of proteins that can separate rheumatoid arthritis from psoriatic arthritis is a subset of the PAPRICA panel, and 90% of the sensitivity of that assay is built around 20 proteins. If we were to convert to an ELISA, we would need a single ELISA, or a set of ELISAs, that could measure those 20 proteins simultaneously. The time and cost associated with the development and subsequent use of multiplexed ELISAs are very significant; by comparison, multiplexed MRM development is quicker and cheaper.

Very often, the challenges of developing ELISAs for panels of proteins have resulted in attempts to minimize the number of proteins incorporated into the multiplex assays. Unfortunately, it seems that as you move to using these assays in new sample cohorts, some of their performance is lost. Keeping the assay open to measuring multiple proteins by MRM means that you can maintain the performance. The challenge is that the assay needs to be run on a mass spectrometer and, to do that robustly and reliably, you have to develop these routine workflows. However, such workflows are cost-effective, and MRM assays can be multiplexed much more easily than ELISAs.

Q: Can a mass spec proteomics lab seamlessly integrate Bravo automation? Are there any factors to consider?

OC: I suppose one thing you need to consider is that, although it is an automated platform, there are still steps where staff need to intervene. You cannot just set it up and walk away.

SP: Most proteomics labs will have experience of the sophistication of mass spectrometers and the challenges of mass spectrometry. Relatively speaking, the Bravo is a very straightforward and simple instrument. I think there's a big motivation for incorporating it into the lab, because the Bravo takes away a lot of the repetitive, manual, potentially frustrating, and certainly time-consuming steps and performs them very efficiently and effectively.

JW: The only other thing to consider, perhaps, is that there is a cost associated with the consumables used on the Bravo, as well as the impact of dead volumes for reagents such as trypsin. We’ve modified the workflows slightly to minimize loss of reagents through dead volumes, and it has worked well.
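The cost of dead volume comes down to simple arithmetic: every run loads the reagent the samples need plus a fixed volume that can never be dispensed. The sketch below uses hypothetical volumes, not figures from the webinar, to show how the share of reagent lost shrinks as more samples share the same dead volume. Batching is only one general way to reduce such losses; the speakers do not specify which workflow modifications they made.

```python
from dataclasses import dataclass


@dataclass
class ReagentRun:
    """Hypothetical reagent usage for one liquid-handling run (volumes in µL)."""

    n_samples: int
    volume_per_sample_ul: float
    dead_volume_ul: float  # reagent left in the reservoir that cannot be dispensed

    def total_required_ul(self) -> float:
        """Volume that must be loaded: what the samples use plus the dead volume."""
        return self.n_samples * self.volume_per_sample_ul + self.dead_volume_ul

    def fraction_lost(self) -> float:
        """Share of the loaded reagent that never reaches a sample."""
        return self.dead_volume_ul / self.total_required_ul()


# Illustrative values only; real dead volumes depend on the labware used.
small_batch = ReagentRun(n_samples=8, volume_per_sample_ul=10.0, dead_volume_ul=40.0)
full_plate = ReagentRun(n_samples=96, volume_per_sample_ul=10.0, dead_volume_ul=40.0)

for label, run in (("8 samples", small_batch), ("96 samples", full_plate)):
    print(f"{label}: load {run.total_required_ul():.0f} µL, "
          f"{run.fraction_lost():.1%} lost to dead volume")
```

With these illustrative numbers, an 8-sample run loses a third of the loaded trypsin to dead volume, while a 96-sample run loses 4%.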

To learn more about the development of targeted biomarker measurements for precision medicine, watch the webinar.

SelectScience runs 10+ webinars a month across various scientific topics. Discover more of our upcoming webinars.