Digital obsolescence: Is your research data at risk?

Experts discuss how technological advances result in a ‘graveyard of software’ and describe a compliant solution designed to safeguard vital data for generations to come

15 Aug 2021

As computing technology evolves at ever-increasing speed, the threat of digital obsolescence grows with it. Outdated file formats, hardware, and software are making it almost impossible to read some older data, rendering decades’ worth of important data inaccessible to future generations. In this interview, we speak with Dr. Natasa Milic-Frayling, founder & CEO at Intact Digital, and Cate Ovington, Director of The Knowlogy, to find out more about technology obsolescence, how to mitigate it effectively, and how to avoid the risks it presents to digital data. Milic-Frayling and Ovington detail a solution designed by Intact Digital to help ensure digital continuity, enabling vital data to be used in its original form and remain available to the scientists of the future.

Please introduce yourself and tell us a bit about your roles

NMF: Intact Digital is an information technology company based in the UK, working with clients worldwide. I founded Intact Digital because of a fundamental problem with digital technology: it becomes obsolete very fast, particularly software. The industry is constantly moving forward and innovating but leaving behind a graveyard of software that doesn't work, which then affects how we use data.

CO: I've been working in the pharma industry for over 20 years, primarily in preclinical work, but I have also supported clinical laboratories. I've been an audit consultant for the past five years, working globally, and in 2020 I founded The Knowlogy, which supports laboratories' research and development teams with the challenges of quality systems, data governance, data integrity, and regulatory requirements.

What challenges are your customers currently facing?

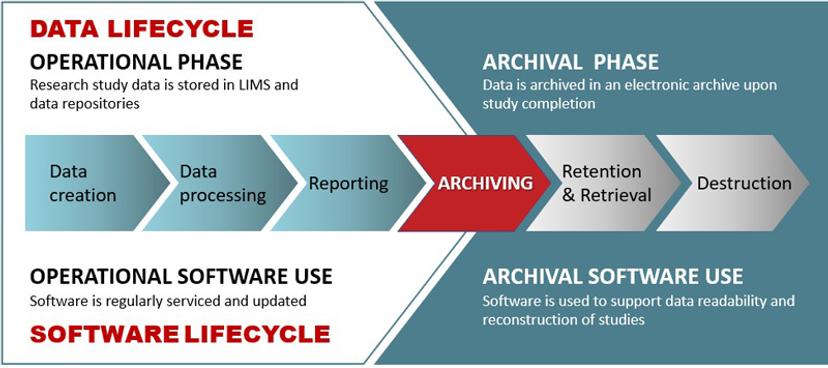

CO: Thinking of those working in pharma research, they are creating vast quantities of data. Technology is moving so fast and getting updated all the time. We're finding that research they did five years ago is becoming inaccessible because the technologies have moved on so far: they can't open those data files, and the software they need no longer works. We’ve got compliance issues too, which means that data must be kept for a specified period of time, often decades, and it still needs to remain accessible in its original form. Obviously, you can't do that if the technology is moving on so rapidly and old technology becomes unusable.

NMF: Data readability is now a common issue. Digital technologies have been in use for a few decades now. Many scientific breakthroughs are unimaginable without modern computers, and it is so important to transfer that knowledge. Let’s take the current COVID-19 situation, which is going to be with us, it seems, for a while. The previous SARS epidemic, another coronavirus outbreak, ran from 2002 to 2004. If the researchers from that time were to transfer digital data and lab results to us in the present situation, we would be looking at research that is almost two decades old. Researchers who are now dealing with COVID-19 will need to save research data for the next few decades and beyond. It's all in digital form. No studies and no results will be readable without the analysis software used in the studies. That’s why we focus on software and provide the INTACT Software Library to keep the software safe and functional. It is all about creating continuity in knowledge transfer to future generations, particularly for pharma, which is a critical sector for discovering treatments and saving lives.

How does INTACT Software Library help overcome these challenges?

NMF: Our expertise is in computing and IT, and we use state-of-the-art techniques to handle problems with aging technologies. We provide a solution that enables researchers to read their data for as long as they need it. No matter how old the data or the software is, researchers can access and process the data safely and reliably.

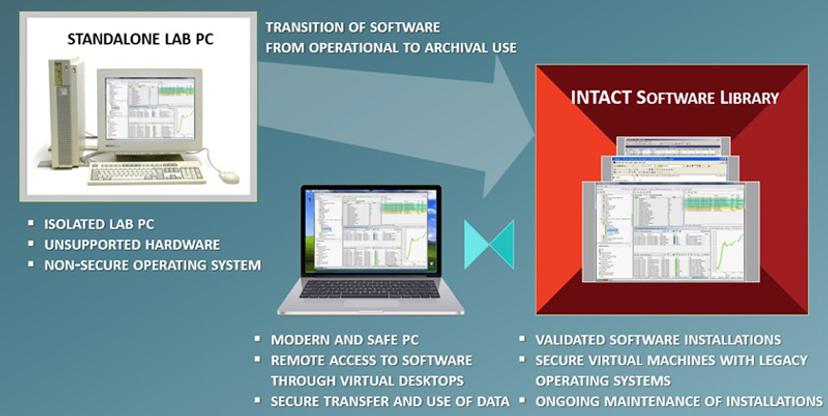

To clarify the general problem: software becomes unusable because the computing environment changes. Suppose that your lab has just got new computers; it may not be possible to install and run some of the software that was used in your research on the new machines.

Currently, the most common way of dealing with that is to keep the old lab computers with the software. But the computer hardware will inevitably fail over time, so it will no longer be possible to use the software and read the data. Even sooner than that, the operating system becomes obsolete, so the computers and the software become insecure. That is a security risk, and these old computers must be isolated from the Internet and from the rest of the network. We keep hearing about instances where hospitals have been attacked by viruses and ransomware; horrible things can happen because of these vulnerabilities.

So, in our solution, we create software installations that overcome the problems of hardware and of security. Essentially, we create your own library of pre-installed software in our secure data center, and you can easily use old software remotely from any modern, secure computer. We enable secure input of data into the software and, from there, you can reproduce the data analysis. This can be done even for research studies that are decades old.

How do you manage working with old software, considering all these issues?

NMF: When we create installations of software in our data center, we must think about three aspects: the first is the technology, the second is licensing and regulations, and the third is human factors — related to both the users of old software and our IT specialists who maintain software over a long time.

Perhaps most interesting is the regulatory aspect. We make sure that all the software installations follow the same steps as when the software was installed in the lab, except that instead of physical computers we use so-called virtual machines. Think of a virtual machine as a computer without its own hardware: it is a ‘software computer’ that runs on another ‘host’ computer and appears to the user as a virtual desktop.

So, we aim for software installations that are validated and compliant with your IT policies and we then take care of their maintenance. We put in place a service agreement that specifies precisely how we take care of the software and the old operating systems that are required, and we constantly monitor the changes in the computing ecosystems. If there are any new risks of obsolescence, we inform you and take action to mitigate them. In fact, we have developed a systematic approach to assessing and mitigating risks of digital obsolescence through our INTACT Digital Continuity services.

How do INTACT Digital Continuity Services help support customers?

NMF: We assist organizations not only with old software but also with assessing the risk of new software, the modern software that is adopted as part of lab digitalization or as part of the digital transformation of the entire organization. That risk of digital obsolescence must be monitored and mitigated with the right actions at the right time. The modern technology of today is the old technology of tomorrow, and time passes quickly in the world of computing. With INTACT Digital Continuity services we help organizations plan and take action. Our solution was built carefully because we understood the importance of compliance scenarios, particularly when somebody has an archive that needs to stay accessible.

CO: The guidelines and the regulations demand that the original data, the raw data from instruments, is retained securely and remains accessible for decades. What often happens is that organizations start paying attention to raw data issues only when they are decommissioning instruments they no longer use for research. Then they realize they have a real problem: the instrument is no longer in use, yet its software, which is by now old, still has to be kept working in order to read the archived data.

NMF: Yes, and that point is typically 10 years into a service contract with the manufacturer of the instrument and the software provider, so you may need to negotiate a license for the archival use of the software. This may not be easy to do, especially if the software vendor is not around anymore. So, digital continuity is about very concrete steps that you can take to ensure that you do not fall into crisis mode. The best time to start thinking about how software will be used in the future is when it is purchased. We provide a systematic way of evaluating whether a particular piece of software will be critical for future use, and at which point to include it in the Software Library so that the data stays readable.

What are the regulatory concerns of your customers that you can help solve?

NMF: It seems that the primary benefit of our approach is that it makes it very easy to validate the software and to read the data, which is needed for regulatory requirements such as GLP (Good Laboratory Practice) and GCP (Good Clinical Practice) compliance. It is very easy for the archivist and the researcher to work from any location because they now have remote access, and for the regulator to check and confirm compliance. All of them can see the software installation, see the data and use it with the software, and see the results and compare them with the reported results. Also, archived data is kept separate from the Software Library, so it is never in danger of being altered or affected in any way. The archivist takes a copy and securely imports it into the Software Library. The researcher uses it with the software, and that data copy is then deleted.
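
Editor's illustration: the copy, use, and delete pattern described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not Intact Digital's implementation, which the interview does not describe. The function names `sha256_of` and `with_working_copy`, the use of SHA-256 checksums to confirm that the copy matches the original, and the temporary scratch directory are all hypothetical choices made for the example.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def with_working_copy(archived_file: Path, analyze):
    """Run `analyze` on a verified copy of an archived file, then delete the copy.

    Hypothetical helper: the archived original is only ever read, never
    opened for writing, and its checksum is compared before and after.
    """
    original_digest = sha256_of(archived_file)
    workdir = Path(tempfile.mkdtemp(prefix="working_copy_"))
    try:
        working_copy = workdir / archived_file.name
        shutil.copy2(archived_file, working_copy)
        # Confirm the copy is bit-for-bit identical before it is used.
        if sha256_of(working_copy) != original_digest:
            raise ValueError("working copy does not match the archived original")
        result = analyze(working_copy)
    finally:
        # The working copy is removed whether or not the analysis succeeded.
        shutil.rmtree(workdir, ignore_errors=True)
    # Confirm the archived original was not altered while the copy was in use.
    if sha256_of(archived_file) != original_digest:
        raise RuntimeError("archived original changed during analysis")
    return result
```

A caller would pass the path of the archived file and a function that performs the analysis on the copy, for example `with_working_copy(Path("study.dat"), run_analysis)`, where both `study.dat` and `run_analysis` are placeholders. Keeping the analysis confined to a disposable copy is what lets the archive itself stay untouched.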

CO: The problem I see with clients is that they adopt practices that are not compliant with the ALCOA+ principles for long-term retention of data. There is a regulatory requirement to make sure that digital data is enduring, available, and complete for as long as it is required. For that, one needs software but, as we can see, technology is moving at such a fast pace that this is a challenge for research. As a quality assurance auditor, I had not previously observed a long-term solution. It is great that we now have a plausible one. With the Intact Digital approach, data is used in its original electronic form, which ensures it stays dynamic and complete. People can go back and review it in the future. And it's also helpful for auditors and regulators to know that the data is still available.

What do you see as future developments in this area?

NMF: Innovation must continue, and the obsolescence of technology and software is a side effect of it. So, it's not something that will go away. As we continue creating new technologies, the previous ones become old. That’s the natural state of this world.

What we hope for in the future is the adoption of a practice that can secure long-term use of valuable data and software. That is now possible, and there is help, like the services that we at Intact Digital are providing. We believe that there will be increased awareness that the aging of technologies can be and must be managed. Digital continuity will become the new norm.

CO: I'd say future developments mean that, actually, the platform or technology used is no longer an issue. The virtual solution enables the data to be accessed in its original form. Therefore, the data you generated is still complete, enduring, and available, meeting the regulatory requirements for its long-term retention.

Find out more about Intact Digital Ltd and its INTACT Software Library and INTACT Digital Continuity Services.