Lab Management: Archiving your study data? Don’t forget the software.

Find out how to manage digital transformation and outdated analysis software to prevent the loss of digital data and stay GxP compliant

6 Oct 2021

Digital transformation of laboratories and laboratory practices is an essential part of innovation. However, the increased adoption of computerized systems requires careful planning for the long-term management of data produced in digital form. Digital technologies, including analysis software for instrument data, become outdated and unusable within years, leaving archives with ‘orphaned’ data that cannot be accessed or used; yet GxP regulations require data to remain readable for decades. The OECD recognizes the challenges of technology obsolescence and, in its newly released guidance on GLP Data Integrity (20 Sept 2021), recommends the use of virtual computing environments to manage the legacy software needed for long-term readability of archived data. But what does that mean for the day-to-day work of researchers and lab managers?

Dynamic data archiving and care

Digitalization of lab processes and increased use of computerized systems are enabling breakthroughs and impact that were not possible in the past. However, the effectiveness of digital technologies comes at the price of rapid obsolescence, which affects our ability to use digital data over extended periods of time.

Digital data in their dynamic form require the corresponding software to ensure data readability and the reproducibility of data analyses. As software becomes outdated and unsupported, it can no longer be installed and securely used on modern computers. Yet without functional legacy software, we lose the ability to validate the research that underpins all subsequent stages of innovation. As a result, archives of past studies are full of ‘orphaned’ data that cannot be accessed and read.

Fortunately, there are IT techniques and technologies that enable the continued use of legacy software. Adopting a principled approach and incorporating them into data management practices can ensure that a lab meets ALCOA+ data integrity requirements and remains fully compliant with GxP regulations.

The method involves virtualizing and validating installations of the data analysis software used in studies. This is the course of action recommended by the OECD. While software virtualization requires a concerted effort, it secures access to the data from all studies performed with that particular software version.

When legacy systems can no longer be supported, consideration should be given to the importance of the data, and if required, to maintaining the software for data accessibility purposes. This may be achieved by maintaining software in a virtual environment.

OECD SERIES ON PRINCIPLES OF GOOD LABORATORY PRACTICE AND COMPLIANCE MONITORING, Number 22. Advisory Document of the Working Party on Good Laboratory Practice on GLP Data Integrity (Section: 6.16. Archive)

INTACT Software Library

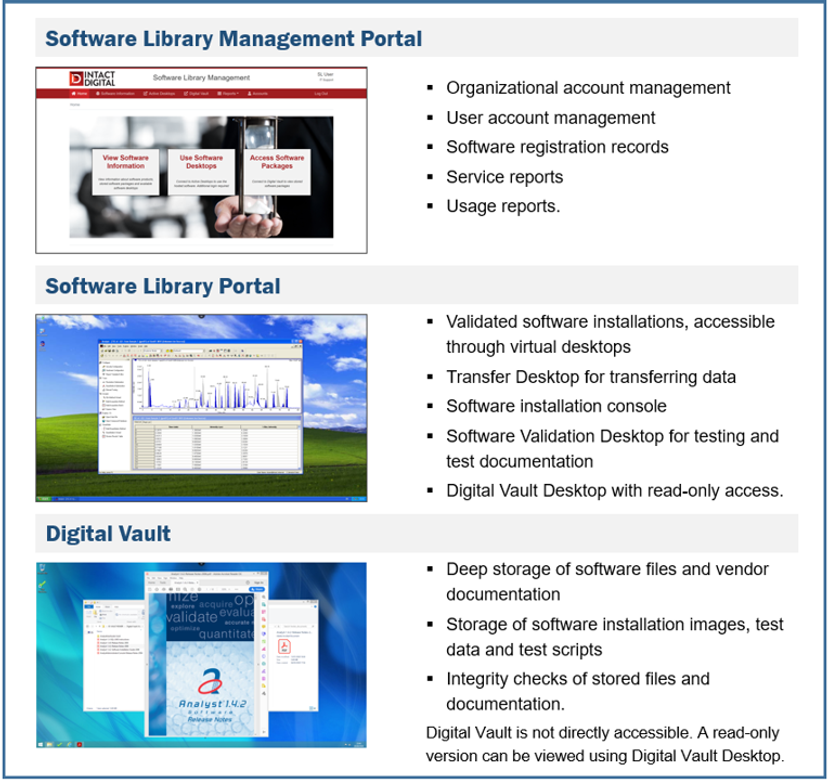

Intact Digital has created the INTACT Software Library platform for hosting and long-term maintenance of legacy software. Each organization or lab is set up with its own INTACT Software Library account that includes (1) the Software Library Management Portal for registering software and users, (2) the Software Library Portal, which supports all stages of software installation, validation and use, and (3) the Digital Vault, which stores the software files, installers, documentation, SOPs and test data.

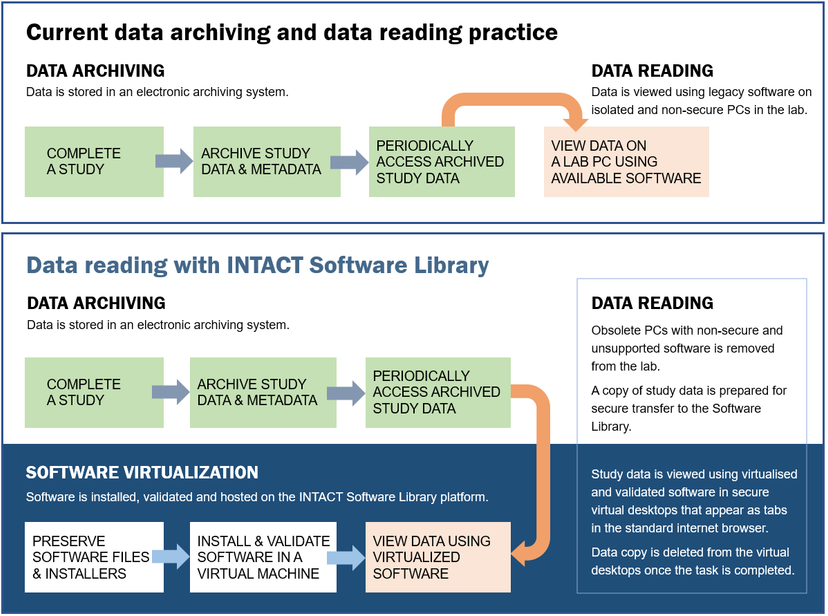

INTACT Software Library complements existing data archiving systems without affecting current data archiving practices. It introduces and supports two capabilities that relate to software management and ensure GxP regulatory compliance:

- Installation and validation of software in a stable and secure virtualized environment

- Ability to read data and reproduce studies using virtualized software instead of outdated lab software installations.

When should the software be virtualized?

Labs can adopt one of two approaches to managing the inevitable obsolescence of software:

PROACTIVE:

- Include software in the Software Library at the time the software is installed and validated for operational use in the lab. Virtualized software is then used to conduct periodic data integrity checks and regulatory audits of archived data.

- The virtualized installations are monitored through periodic use, optimizing quality assurance and quality control procedures.

REACTIVE:

- Include software in the Software Library at the time the computerized systems are decommissioned. Since the data analysis software is decommissioned along with the system, this is effectively the last chance to create and validate the software installations needed for long-term readability of the data archived while the system was in use.

- When the software is removed from the lab, there is only limited time to establish quality assurance and quality control for the virtualized installations.

In both cases, Intact Digital assists the lab and the software vendor in formulating licensing terms for archival use of the software beyond the standard service agreement and beyond the lifetime of the software product. It is important to remove obstacles to software installation and use that may arise from digital rights management and license validation methods. Hosting software within INTACT Software Library gives peace of mind to both users and vendors: the legacy software is protected from potential misuse, and the lab is assured that the software is maintained for as long as needed.

How does virtualization fit into a lab’s practices?

The complete process, from registering the software to its installation and validation, is handled by qualified Intact Digital IT experts in collaboration with the IT staff who support the lab and manage the purchase and installation of lab software. Once the lab manager decides to include a specific software product in the INTACT Software Library, an Intact Digital project manager is assigned to coordinate the software registration, file uploads into the Digital Vault, software installation and validation (following IQ, OQ and PQ protocols for virtualized installations), and the configuration of virtual desktops that enable secure remote use of the software and the archived data.

Researchers are involved in creating test protocols (to be used in OQ and PQ) for the software installations and in selecting test data for validating the software functionality essential for long-term data readability. Once software validation is complete, researchers can reproduce studies from archived data using virtual desktops that run in a standard web browser on their secure computers. A copy of the archived study data is securely transferred to a virtual machine with the corresponding software, so that the data analysis is performed in the same way as on the original lab PC. This convenient and reliable access to legacy software enables authorized staff to run data analysis protocols during regulatory audits and to use the software installations for training and knowledge transfer to new lab staff.

Software virtualization pays off with a multitude of benefits

A single installation of the data analysis software can be used to ensure readability of all the studies that have been conducted with that software version. Thus, the effort involved in securing long-term software use pays off with a multitude of benefits for researchers and lab managers:

- Reduced risks from legacy software failures due to obsolete hardware and security breaches

- Convenient long-term use of virtualized software from secure computers within or outside lab premises

- Easy preparation for regulatory audits

- Compliance with GxP regulations on data retention and access

- Effective knowledge transfer and increased productivity by avoiding redundant work.

OECD guidance on securing the use of legacy software and long-term data integrity through software virtualization demonstrates the forward thinking that is needed now and will be critical in the future. Computerized systems, including instrument data analysis software, increasingly use automation, AI, machine learning and data science techniques to accelerate innovation. Ensuring the reproducibility and validation of data analysis for such systems requires stable computing environments in which data processing protocols can be re-run and results reliably reproduced.

Intact Digital is at the forefront of creating methods and building virtual environments that enable labs to reproduce data processing through reliable use of software and algorithms. This is vital for securing the long-term value of research and innovation.

Related articles:

• Digital obsolescence: Is your research data at risk?

• Long-term data integrity and GxP compliance with virtualized software installations.

Find out more about Intact Digital Ltd and its INTACT Software Library and INTACT Digital Continuity Services.